Controlled Dataset Expansion

Expand QA datasets through an evaluated loop that balances correctness, novelty, and drift.

What Is Dataset Expansion?

Dataset Expansion is the process of taking an existing QA dataset and adding new questions that respect the same style, scope, and quality as the original data.

The goal is not to generate arbitrary new questions, but to extend a dataset without changing what it is:

In qa-tools, Dataset Expansion is treated as a controlled, evaluated loop, not a one-shot generation step.

Why It Is Hard

At first glance, expanding a dataset sounds straightforward: "generate more questions like these."

In practice, small changes in prompt wording, scope, or evaluation criteria can quickly lead to significant issues.

Stylistic drift

New questions lose the original voice, tone, or structural complexity.

Topic expansion

The model drifts into unintended, adjacent, or irrelevant subject areas.

Duplication

Generating templated, repetitive, or semantically identical questions.

Quality regression

Subtle drops in answer accuracy, reasoning chains, or factual grounding.

For this reason, Dataset Expansion is not solved. This use case documents where it works today, where it breaks, and how qa-tools makes those limits visible.

How It Works

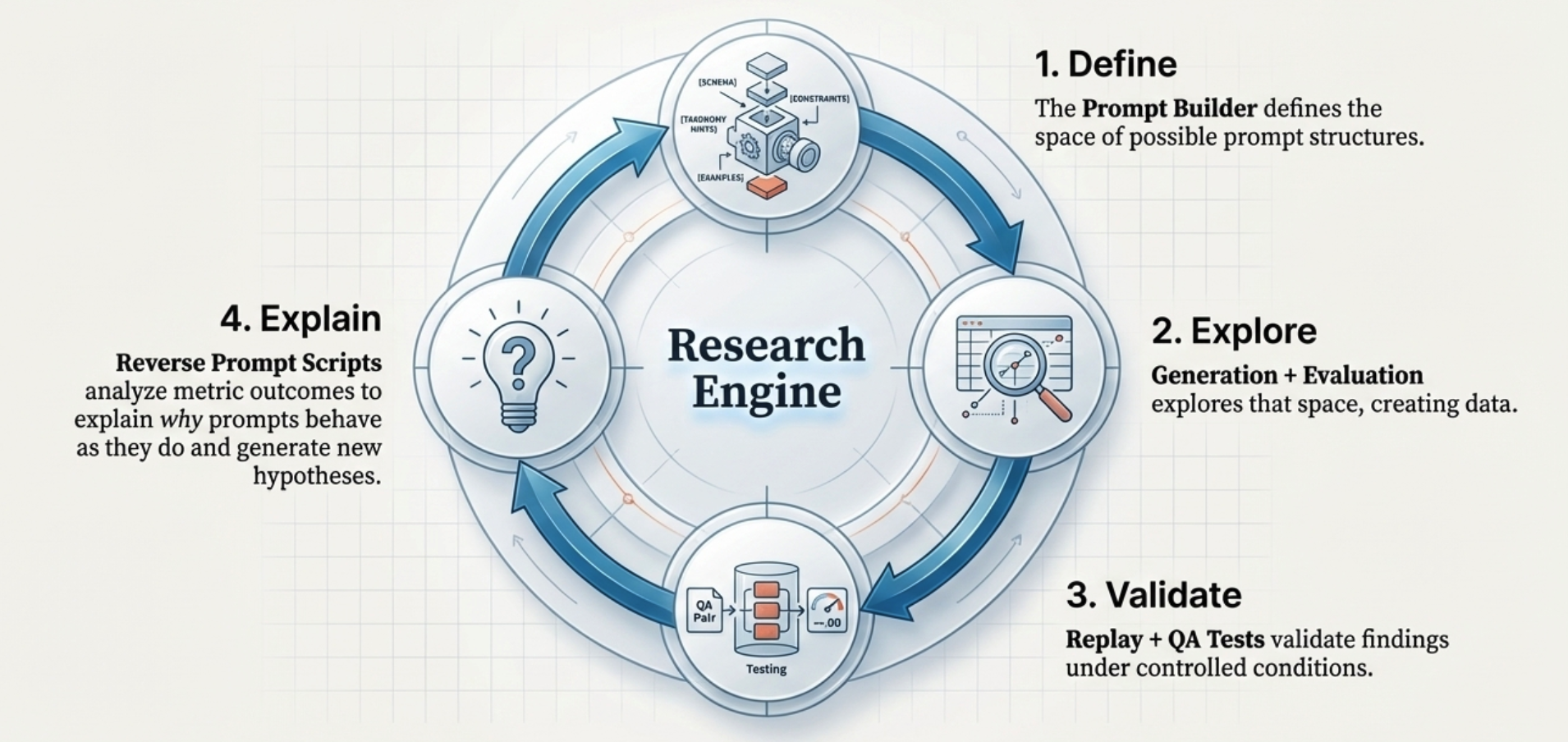

A closed, measurable loop — not one-shot generation.

When Expansion Works Best

Stable style

Consistent question form, tone, and answer format.

Clear topical scope

Domain vocabulary is consistent throughout.

Well-specified task

Short factual QA, definitions, API usage, etc.

Reliable judgment

Candidates can be evaluated using similarity and quality rubrics.

Enough semantic signal

Not ultra-short, not ambiguous content.

Common Failure Modes

Missing context

No grounding information for generated questions.

Ambiguity

Questions with multiple valid interpretations.

Over-broad scope

Topic expands → drift into unintended areas.

Template collapse

Low diversity in generated outputs.

Wrong thresholds

Evaluation criteria don't reflect task reality.

These are signals, not bugs.

What We Measure

Instead of claiming success, qa-tools measures behavior. For each dataset, we report:

Expansion is considered "healthy" when acceptance stabilizes without collapsing diversity.

Not all datasets are equally expandable

If expansion fails, the loop shows why — through low acceptance, drift, or novelty collapse. This is a governance signal, not a failure.